Computer Chronicles Revisited 82 — Invisible Cities, DOM, TheaterGame, and Terpsichore

The topic of “computers and the arts” today largely focuses on the use of artificial intelligence to suck up the work of artists–often without their permission–and regurgitate it based on text prompts. No doubt the goal of many of these venture capital-funded projects is to eventually replace the human artists altogether.

Such concerns were still more science fiction than dystopian reality back in March 1987, when this next Computer Chronicles episode first aired. But the ever-wise George Morrow nevertheless cautioned that we should always ensure that machines serve the humans, not the other way around. Stewart Cheifet opened the program by showing Morrow, this week’s co-host, a series of black-and-white sketches produced by artist Harold Cohen using a plotter hooked up to a computer. Cheifet noted that some artists believed computers and art didn’t mix. What did Morrow think? Morrow disagreed. He thought that critics of using computers in the arts were getting the hardware mixed up with the software. He cited the film Star Wars as an example of artists creating effects with software. In art, there were a lot of repetitive tasks. Computers could be a marvelous tool for helping automate those tasks–provided the software was made by the artists and not some computer scientist.

“Now I’ve Had the Time of My Life…”

Wendy Woods presented her first remote report, which focused on Invisible Cities, a ballet that featured both human dancers and giant robotic arms. Woods said this performance was an example of the new collaboration between the human dancer and increasingly humanlike robots. The programmer for this piece was Dr. Margo Apostolos, a professor and director of the dance department at the University of Southern California, who first got the idea from watching a robot used in a veterans hospital research project. Apostolos said when she first saw that robot at Stanford University, she was curious to see if something that was mechanical and very staccato and linear in its actions could be made to move “beautifully.”

Woods said that Apostolos had since produced several robotic dance works of her own (see below), some with and some without human partners. Apostolos discovered that robots could make a lasting impression. She said the response had been mixed: critics who saw the Invisible Cities performance in San Francisco felt the robots actually upstaged the dancers.

Another artist intrigued by robotic movement, Woods said, was Pamela Green of Stanford University. Her piece, Animal, Vegetable, or Mineral, began as a costuming project. Green said the form was moving and reminded her of an animal, particularly a bird. So she asked herself, “What if these things were really to be birds?” She tried that idea out in an experiment, but it proved to be “too simple.”

Woods explained that Green’s work was for a single robot arm programmed to move within an interactive environment. Animal, Vegetable, or Mineral was only about eight minutes long. But it took a month of programming to successfully choreograph. Green said she didn’t want a passive robot with things happening to it. She wanted to explore what it was that gave something a spirit of animation, i.e., at what point was something “animal, vegetable, or mineral”?

A Dancing Word Processor

Eddie Dombrower joined Cheifet and Morrow for the first round table. Dombrower was president of Dom Dance Press, which developed a software program for choreographers known as DOM.

Morrow asked Dombrower for some personal background and how he came to write DOM. Dombrower said he studied both dance and computers in college. After graduating in 1980, he received a grant to go to England and study computers and choreography. At that time there wasn’t a whole lot being done in the field, especially not on small, portable computers. So he worked on using computer animation of human figures to represent dance notation. Cheifet noted this was easier than trying to describe dance notation in words. Dombrower agreed.

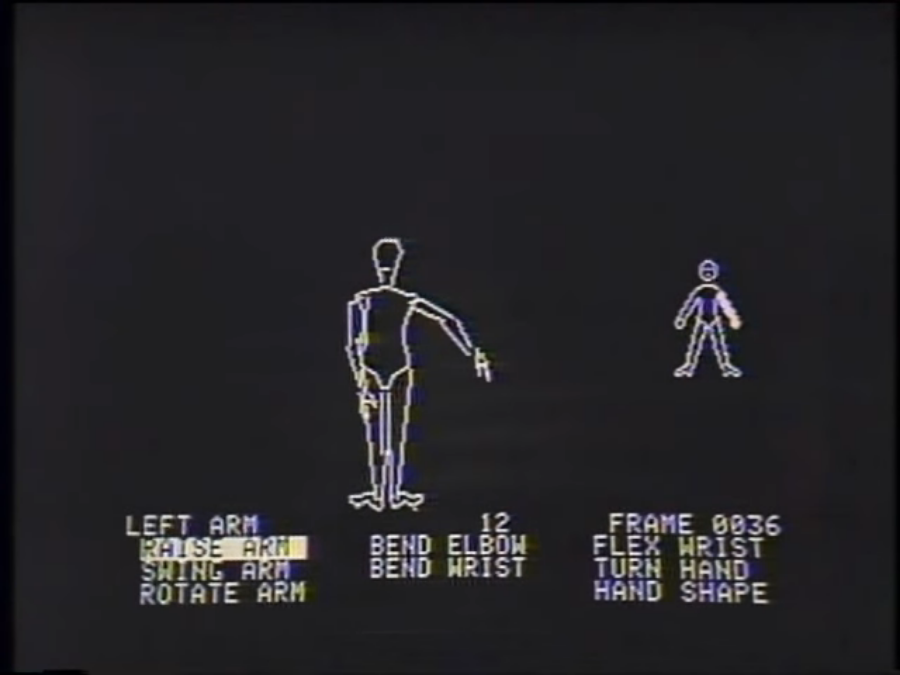

Dombrower then provided a demonstration using DOM running on an Apple IIc (pictured). Dombrower explained the software’s editing system. You could move parts of the human figures by using menu-driven commands working in conjunction with the arrow keys. For example, you could select “Raise Arm” and then use the arrow keys to move the arm into a specific position. Dombrower noted that he was also working on a product for Electronic Arts, Earl Weaver Baseball, that used this same basic system to do character animation.

Morrow observed that when you finished with the character animation in DOM, you ended up with a dance sequence. Dombrower said that was correct. You saved each individual frame and then moved on to the next frame. Cheifet noted that DOM was effectively a “dancing word processor.” Dombrower agreed.

Morrow asked if DOM could be used by both choreographers and dancers. Dombrower said it could be used by choreographers or teachers, but mostly it was a tool for dancers to learn material they otherwise couldn’t access because of where they lived.

Dombrower then showed a completed dance animation sequence, an “introduction dance” that included a “pedestrian move,” i.e., a walking animation, which he said was one of the more difficult things to notate. In this mode, DOM was akin to a video recorder. The user could play the entire animation or freeze frames. You could also rotate the figure to see the dance sequence from a rear angle. Morrow observed this made DOM even more flexible than videotape. Dombrower said you could also edit the sequence to accommodate a less advanced dancer. The dancer could also repeat small sections of the animation over and over to learn a particular sequence or step.

Morrow asked about the feedback Dombrower had received for DOM. Dombrower said the software was currently out at 15 beta test sites, mostly teachers and universities, and he’d received very positive feedback. Morrow enthused this was an example of what he was talking about earlier: an artistic professional creating a program. Dombrower then concluded the segment by running another demo he had created in DOM, a breakdancing routine.

The Play’s the Thing…

Charles Kerns and Larry Friedlander joined Cheifet and Morrow for the next segment. Both guests worked at Stanford University where they created TheaterGame, the subject of the next demonstration.

Morrow asked Kerns, a project leader with Stanford’s IRIS program, about his work. Kerns explained that IRIS was part of Stanford’s academic computing program and one of its projects was working with faculty members to develop instructional courseware. TheaterGame was one example of this. Morrow clarified that IRIS was a resource for people who weren’t necessarily computer literate. Kerns said that was correct. IRIS supplied equipment and programming expertise.

Morrow asked Friedlander to describe TheaterGame itself. Friedlander said the software was designed to help students with no theatrical background or access to a physical stage–only the text of a play–develop their imaginations for staging and spatial relationships. So when students read the play they could also help create the scene.

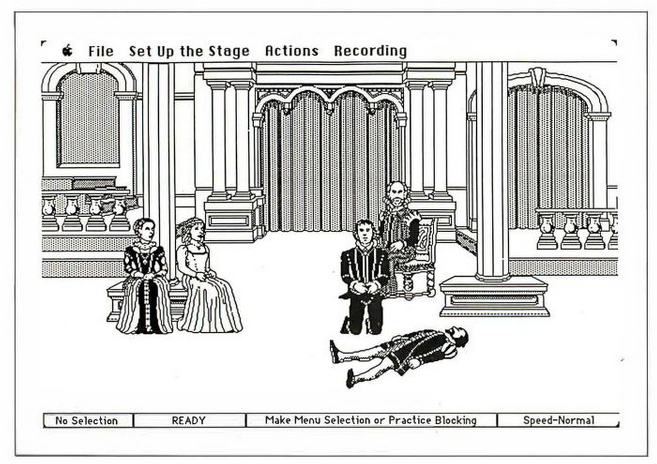

Friedlander showed an example of this staging using TheaterGame, which ran on a Macintosh Plus (pictured). This was a scene from William Shakespeare’s Hamlet. In this scene (Act III, Scene 1, for you Shakespeare fans out there), Friedlander explained, Hamlet and Ophelia were attempting to reconcile. The software animated the characters along with the text of the play. The student would start by picking the stage, actors, and props. Morrow said TheaterGame could therefore be used to give students an actual assignment to create this scene. Friedlander said that was correct. The student basically had to make all of the important decisions that a director or actor would make.

Friedlander continued to demonstrate the program’s features. Individual actors could be moved into one of four positions: standing, sitting, kneeling, or lying down. You could also move just the actor’s head. Morrow observed that the interface wasn’t so much icon-driven as position-driven, with no need to use the keyboard. Friedlander said that was correct. Kerns had developed the program so that you only needed to use the mouse and click on a character to move them.

Morrow asked about the division of labor in making TheaterGame. Friedlander said Kerns did the actual programming. But Friedlander was essentially the designer. There was also a graphic designer, Marge Boots, who worked with the Macintosh regularly.

Cheifet asked Friedlander if TheaterGame was something that could actually be used by a director for, say, blocking out a play. Friedlander said it could be useful for a large scene where the director had to manipulate a lot of characters and work out the “traffic” beforehand. Morrow said taken to its logical conclusion, the software could be used to handle rehearsals. Friedlander said it could record everything that happened at a rehearsal. Right now, it was common practice for a stagehand to write all that information down by hand.

Photons Be Free!

Wendy Woods returned for her second report, which focused on a computer-based form of art, holography. Woods noted that television was a 2D medium, so the viewer couldn’t see what she was looking at: a stunning, moving 3D image. Holographic artist Sharon McCormack made these images by first capturing computer graphics on film. The process of transferring these images to holographic film was tedious and exacting, and for that job McCormack used an Apple II. The microcomputer drove the optical printer that made the holograms. (Based on the screenshot, the software was “Hologram Printer 2.1.”) The software controlled exposure time, running time, the stability of the optical printer, and the ratio of the two laser beams used to create the hologram. McCormack told Woods that because of the computer, she was able to input all of the different parameters for making a hologram from a keyboard.

Woods added that the original design was also created on a computer. Just a few years ago, creation of a rotating, textured image required the power of a Cray supercomputer. Today, it could be done on a small graphics workstation. McCormack said that with the computer, she could have whatever surface she wanted. She could change the scale or perspective. She could choose the “lens” in the computer without having to carve anything out.

Woods added that a new technique called “embossing” was being developed to put holograms directly onto non-photographic surfaces, such as paper, which would give the technology much greater exposure.

Recreating the Renaissance on a Macintosh

John Burke and Stacy McKee Mitchell joined Cheifet and Morrow for the final segment. Burke was a conservator with the Oakland Museum of California. Mitchell was CEO of Great Wave Software.

Morrow opened by noting it wasn’t obvious how a museum conservator used software in their work. Burke explained his role was to take care of the objects in the museum, including restoring and conserving them. Documentation was very important to that work. A conservator needed to know the condition of an object at all times, e.g., what was being done to it, what had been done to it in the past, and so forth.

Burke explained that he used off-the-shelf software–Microsoft File in conjunction with MacVision–to keep a picture of the objects that he worked on. He showed a selection from his conservation database, which was made in File on the Macintosh. When you selected an item from the database it pulled up a digitized picture of the art object. The digitized image could then show what part of the object was damaged or needed work, which Burke said was much easier than trying to explain it in words. The MacVision digitizer worked with both 2D and 3D objects, he added.

Cheifet then turned to Mitchell and asked about her company’s software, Terpsichore, which focused on music rather than physical art objects. Mitchell said the original Terpsichore was a collection of 312 instrumental dances compiled by German composer Michael Praetorius and first published in 1612. (The name “Terpsichore” refers to the ancient Greek goddess of dance.) The Terpsichore computer program by Richard Ray was a subset of 181 of those 312 compositions on floppy disk, including the digitized Renaissance instruments necessary to play them.

Mitchell went on to explain that Terpsichore was an add-on to another Great Wave music software program, ConcertWare Plus. She added that 80 percent of the music on Terpsichore had never been published in auditory form before, only as written compositions. Morrow noted that many of the Renaissance instruments were also not readily available today. Mitchell reiterated that the software recreated the sounds of those instruments.

Mitchell then ran Terpsichore on a Macintosh Plus in conjunction with a Casio CZ-101 synthesizer that communicated via a MIDI interface. The software played one of the Praetorius pieces, Ballet de la Royne, which included eight instruments: a rackett, a recorder, a krummhorn, a viola da gamba, a regal, a lute, a bombarde, and a true harpsichord.

Morrow asked how many instruments could be played at one time. Mitchell said it depended on the synthesizer. The Casio CZ-101 could play only four at a time. But the software could support up to eight instruments. Some Yamaha synthesizers could support all eight at once.

Cheifet asked about the market or application for Terpsichore. Mitchell said the primary audience was Renaissance music enthusiasts. Some universities also used it for their early music programs. Cheifet asked about the unique contributions offered by the computer. Mitchell said the computer made it possible to record music that previously could not be recorded because the original instruments were no longer available in large quantities.

Turning back to Burke, Cheifet asked if he’d ever considered designing software to handle his conservation work as opposed to using off-the-shelf software. Burke said yes, his museum had been talking about doing documentation using artificial intelligence to help figure out where damage existed on a particular art object. That would make it possible for a number of people to do something that right now required an expert.

Apple Cut Prices as SE/30 Launched

Cynthia Steele presented this week’s “Random Access.” The episode available from the Internet Archive is a rerun of the original March 1987 episode. So this news segment was from January 1989.

- Apple announced price cuts for its Macintosh line. The price of a Macintosh SE with a 40 MB hard drive was now $4,369 (down 14 percent). The Macintosh II with the same hard drive was now $7,369 (down 9 percent). And the Macintosh IIx with an 80 MB hard drive was now $7,869 (down 16 percent).

- The new Macintosh SE/30 had received generally good reviews. Steele noted the SE/30 had a different expansion slot architecture than the original SE, which meant it could use cards designed for the Macintosh II but not those designed for the original SE.

- Jasmine Technologies announced a new erasable optical disk drive. Steele said the new unit was a 5.25-inch drive with a 600 MB capacity and an average seek time of 50 milliseconds. The price was under $5,000.

- Verbatim announced its own 3.5-inch erasable optical drive with a claimed average access time of 30 milliseconds. Steele said there was no word on price or shipping date.

- Unisys introduced what it was calling the first “desktop mainframe,” the Micro A, which would be capable of running MS-DOS programs.

- Lotus Development Corporation unveiled its new hard disk management program Magellan, which allowed users to search by keywords and combine files or portions of files. Steele said Magellan would ship in April 1989 for a list price of $195.

- Lotus also reported that its fourth-quarter 1988 earnings fell 60 percent compared with the same period in 1987, from $23 million to $9 million.

- Hungarian game designer Ernő Rubik said he would not license his puzzles, including the classic Rubik’s Cube, to any computer game publishers.

Bringing Theater to the People

Larry Friedlander has been associated with Stanford University for nearly 60 years. Friedlander spent most of his career as an English professor. And as this episode illustrated, he has often been at the forefront of embracing new methods of bringing theatrical experiences to the public.

For example, in 1969 Friedlander advised an undergraduate student drama group that staged free “community theater” performances inside Stanford dorms. He also planted “spontaneous actors” in his contemporary drama courses to intentionally stage disruptions while he lectured. A year later, in 1970, Friedlander spent six weeks touring small towns in seven states, where he and three colleagues produced a variety of “dance, poetry, mime, lectures, and folk songs” for local audiences, according to the Palo Alto Times.

Fast-forward to the mid-1980s, when Friedlander told the San Francisco Examiner that he got the idea for what became TheaterGame while teaching a course “that stressed the performance aspect of Shakespeare’s plays.” Friedlander approached Stanford’s Faculty Author Development program, where Charles Kerns worked. Kerns was then assigned the task of turning Friedlander’s concept of helping students visualize the text of Hamlet into TheaterGame.

The development process took about two years, according to Kerns. He told the Examiner that the most difficult part was “creating a design that would let the student control the characters” in a manner that was “as simple as driving a car.”

TheaterGame was distributed commercially, at least on a limited basis. The Examiner said the program was available for $25 at a Kinko’s store in Berkeley, California. Friedlander and Kerns also discussed incorporating TheaterGame into a potential LaserDisc package that would include various clips from filmed versions of Hamlet, but I don’t think that ever came to fruition.

Kerns actually left Stanford in 1989 to join Apple for several years as a senior research scientist with the Apple Classroom of Tomorrow project. He returned to Stanford in 1999 as associate director for the school’s Learning Laboratory and remained with the university until he retired in the mid-2010s. Starting in 2011, Kerns authored a series of mystery novels known as the Oaxaca Mysteries.

Notes from the Random Access File

- This episode is available at the Internet Archive. The original production date was likely March 3, 1987. But as previously noted, the recording from the Archive is a rerun dated January 27, 1989.

- Eddie Dombrower appears in another Chronicles episode to discuss a program he referenced in this appearance, Earl Weaver Baseball, so I’ll defer delving into his background until later.

- Harold Cohen, the artist whose work Stewart Cheifet referenced in the studio introduction, was best known for creating AARON, one of the first artificial intelligence programs designed to produce art. Cohen was a professor at the University of California, San Diego, for three decades and served as director of the school’s Center for Research and Computing until his retirement in 1998. Cohen died in 2016 at the age of 87. The Computer History Museum published an article by Chris Garcia memorializing Cohen and his work with AARON following his death.

- Dr. Margo Apostolos is still affiliated with the University of Southern California as a professor of dance. Since 2007, she’s concurrently served as the co-director of the Cedars-Sinai/USC Glorya Kaufman Dance Medical Center in Los Angeles, which she co-founded with orthopedic surgeon Dr. Glenn Pfeiffer. Apostolos also authored Dance for Sports: A Practical Guide, which was published in 2018.

- Sharon McCormack graduated from Humboldt State University (now Cal Poly Humboldt) with an art degree focused on photography. She transitioned to holography after taking a class in 1970 at the San Francisco-based School of Holography, which she later owned and directed from 1975 to 1989. Two of her best-known commercial works were published in 1991: a Sports Illustrated cover featuring an image of Chicago Bulls star Michael Jordan, and the cover art for Prince’s album Diamonds and Pearls. McCormack later relocated to Washington State and continued to work in holography well into the 2000s. She reportedly passed away in 2016.

- John Burke retired in 2015 from his role as chief conservator at the Oakland Museum of California. Following his retirement he taught at the now-closed John F. Kennedy University and the Tainan National University of the Arts in Taiwan.

- Stacy Mitchell and her husband founded Great Wave Software in 1984. Based in Scotts Valley, California, Great Wave largely became known for developing educational software, including Reading Mansion and NumberMaze Challenge. In January 1999, the Mitchells sold Great Wave to the Ideal/Instructional Fair Publishing Group, a division of the Chicago-based Tribune Company.

- Invisible Cities debuted in December 1985 at Stanford University’s Memorial Auditorium. Brenda Way was the choreographer and Michael McNabb composed the music for the ballet, which was developed in conjunction with Stanford’s Robotic Aid Project. Margo Apostolos helped program Way’s choreography into “Howard,” a 120-pound seven-jointed robotic arm that cost $50,000. (I believe this is the same Stanford robotics project featured in a February 1985 Wendy Woods report that I covered in a previous blog post.)

- The news item about Ernő Rubik refusing to license his “Rubik’s Cube” for computers puzzled me at first because I remembered hearing about a version of the famous puzzle for the Atari 2600. Indeed, Atari, Inc., published Atari Video Cube for the 2600 in 1982, which was an unlicensed knockoff. Atari did briefly acquire a license to use the “Rubik’s Cube” name around 1984, but that only lasted a short time. There have apparently been a number of computer games since then using the “Rubik’s” name, so it looks like Rubik later reconsidered his position.